This year’s RT2 took place May 21-23, 2025, on UC Berkeley’s campus. BITSS Program Manager Jo Weech shares common questions from 28 early-career researchers who are working to implement open science practices in their own research.

The academic community has long reckoned with epistemic challenges, from the reproducibility crisis to the underreporting of null results, underpowered research designs, and lack of data transparency, among others. Published estimates of reproducibility still vary widely, from as low as 16% to as high as 43%, reflecting deep, unresolved gaps in research transparency and replication (Chang & Li 2015; Gertler et al. 2018). Thanks to the efforts of BITSS and our colleagues at the Center for Open Science, I4R, preprint communities, and beyond, we now have an impressive suite of tools, research methods, and publication standards that, if widely adopted, stand to revolutionize the quality and credibility of scientific research.

BITSS launched its flagship Research Transparency and Reproducibility Training (RT2) in 2016 to ensure that social scientists across disciplines and geographies have access to the tools and resources they need to practice good science. Since then, the initiative has trained over 3,000 scholars from over 30 countries in topics such as threats to research credibility, research design specification, tools for research reproducibility, evidence synthesis, and open science software. The curriculum has evolved along with the open science movement; more recent courses have incorporated new tools like OSF and GitHub integrations.

To newer researchers, these tools may seem to make intuitive sense. Why weren’t we pre-registering research designs before? Doesn’t it benefit all of us to make data publicly available, to the extent that we can? It is heartening to see a new generation of researchers putting these tools into practice. This year’s RT2 featured 28 early-career researchers who are pursuing a PhD, have recently completed one, or work as research practitioners. They are based at institutions in Australia, the Netherlands, Nigeria, and the United States, across disciplines including economics, political science, epidemiology, and transportation studies. In their applications and pre-course survey, they shared their interest in and prior experience with research transparency. According to one participant:

“Open science is a must. It is more than a concept; it is a movement and an instrument of social and educational justice needed to promote global equity and inclusion.”

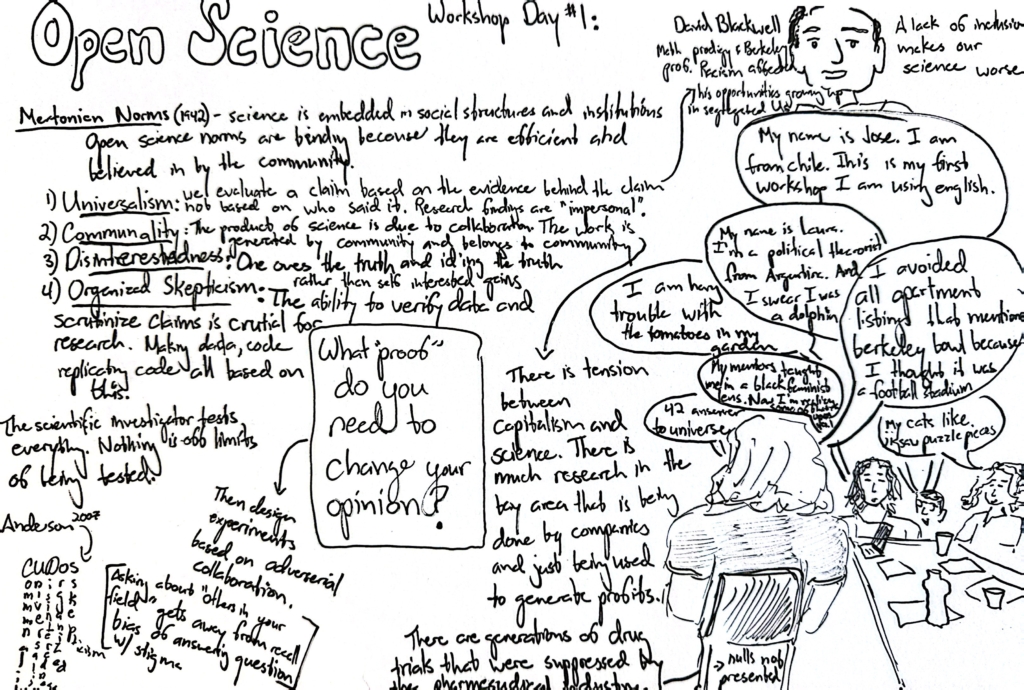

The three-day workshop combined theoretical presentations with hands-on sessions, where participants worked through real-time coding exercises in addition to open discussions. Edward Miguel kicked off the training with a session on the scientific ethos, misconduct, and transparency, while Mario Malički from Stanford discussed the role of journals in advancing transparency.

Participants learned practical skills including version control with GitHub, power analysis through the MIDA framework with Alexander Coppock from Yale, and creating pre-analysis plans for experimental designs. Thomas Dee from Stanford addressed pre-registration for quasi-experimental designs, while Jade Benjamin Chung discussed lessons from the medical field on pre-analysis plans and peer review. Sessions on research ethics covered returning results to study participants, and Fernando Hoces de la Guardia presented on standardizing the reporting of research results in pre-registered studies.

The Hard Questions: Implementing Open Science Tools

There is still a long way to go before open science methods and tools become the norm, and many questions still face the open science community. Our participants raised a number of them. For example, it is accepted best practice to pre-register experimental studies. But what about quasi-experimental designs? How can we pre-register quasi-experimental studies while still allowing for participatory research that is driven by the subjects on the research team or in the study? What about ethnographic research or exploratory studies, which don’t start with the outcomes in mind? What about data privacy? Are there times when it may be inappropriate to make data publicly available? And where can researchers go to find best practices for de-identifying data?

A larger concern about implementing open science is the demand that it places on researchers’ time. Researchers are already largely time-constrained, given the amount of work it takes to plan a high-quality study, to work with advisors and collaborators, to transform and analyze large data sets, and to eventually prepare a paper for publication. Placing additional burdens on resource-constrained researchers might have the unintended consequence of making research more exclusive instead of less. Raising awareness of this potential threat and coming up with potential solutions were also an important part of the workshop.

Which takes us all back to RT2. I was incredibly inspired by this year’s participants. They are taking open science practices very seriously and coming up with novel ways to make sure that their work is transparent and reproducible, regardless of the country where their data is being gathered or of the details of their study design.

It is possible to put all of these principles into practice and to ensure that our research findings are credible, rigorous, and accessible to the global community. I am very impressed by this cohort’s drive; it’s a reminder of how exciting it can be to embark on a new project, or to improve upon an existing project that you believe in. I learned a lot from their research presentations and individual projects, in addition to the presentations from our selected speakers. As mentioned earlier, academic research faces challenges of both resources and credibility. It may be difficult to address the resource challenge, but there is work that we can do to enhance the credibility and quality of our research.

In terms of next steps, we’re looking forward to seeing what comes out of their research projects. We will also be opening a call for proposals later this summer for funding to conduct research transparency workshops at other institutions. These are our Catalyst grants, which have funded projects at institutions and organizations around the world. Until then, we cannot say thank you enough to this year’s RT2 cohort. We’re excited to have you as members of the BITSS community!

You can view the complete RT2 agenda and presentation slides here.