The Royal Society’s motto, “Take nobody’s word for it,” reflects a key principle of scientific inquiry: as researchers, we aspire to discuss ideas in the open, to examine our analyses critically, to learn from our mistakes, and to constantly improve. This type of thinking shouldn’t guide only the creation of rigorous evidence — rather, it should extend to the work of policy analysts whose findings may affect very large numbers of people. At the end of the day, a commitment to scientific rigor in public policy analysis is the only durable response to potential attacks on credibility.

We, the three authors of this blog (Fernando Hoces de la Guardia, Sean Grant, and Ted Miguel), recently published a working paper suggesting a parallel between the reproducibility crisis in social science and observed threats to the credibility of public policy analysis. Researchers and policy analysts both perform empirical analyses; have a large amount of undisclosed flexibility when collecting, analyzing, and reporting data; and may face strong incentives to obtain “desired” results (for example, p-values of <0.05 in research, or large negative/positive effects in policy analysis).

Social science is responding to its credibility crisis with a suite of principles and practices collectively termed open science. Examples include posting data and code, pre-registering analysis plans, and the use of tools that increase reproducibility, like dynamic documents and version control. Policy analysis, on the other hand, has yet to confront some of the questionable research practices that undermine its credibility.

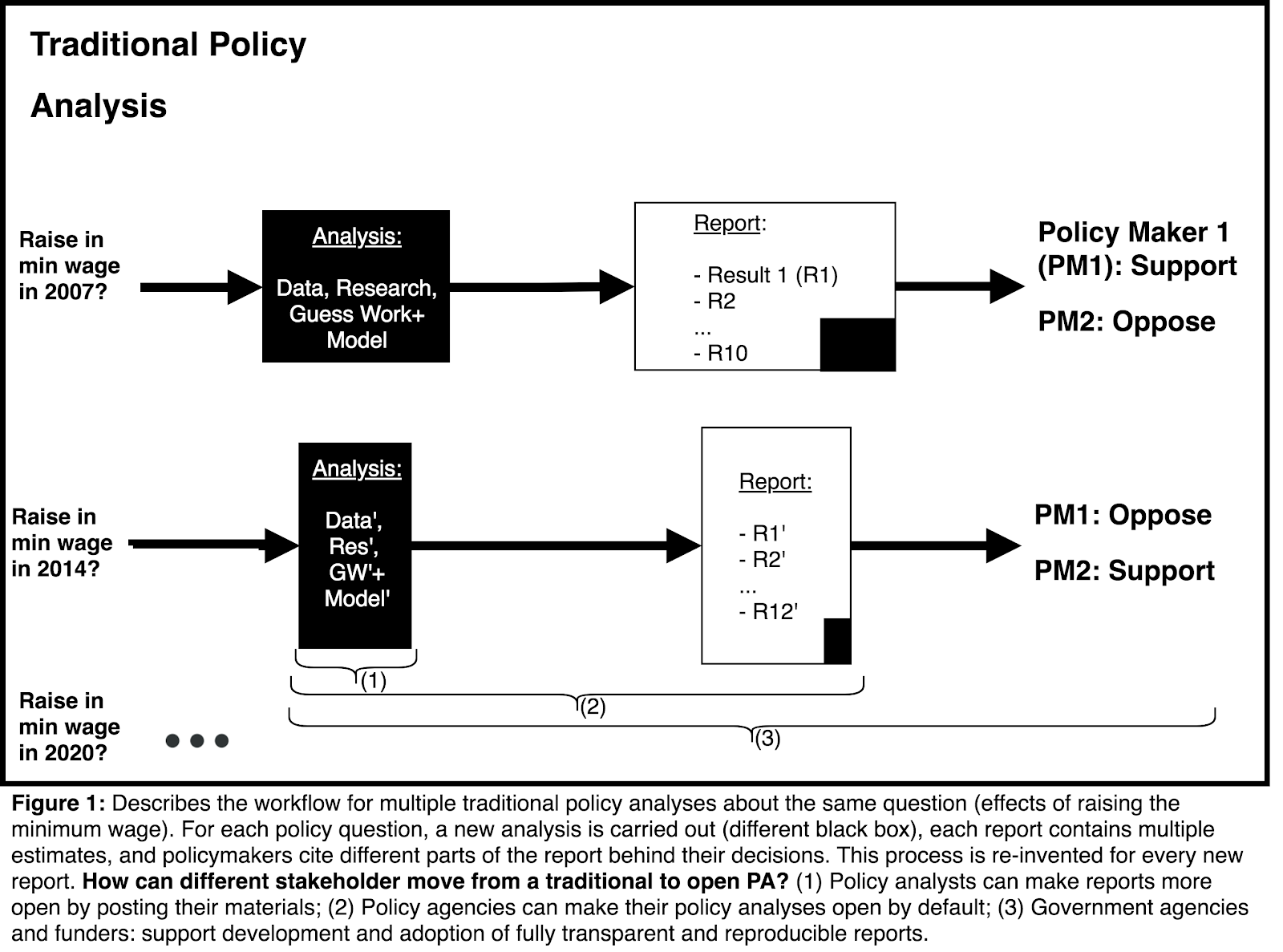

When we talk about policy analysis, we mean the work of government agencies, think tanks, and academics to assess how specific policies or reforms affect people and the economy. These assessments are meant to be objective and credible, but they don’t always turn out this way. Two recent examples, both of which gained considerable media attention, were the 2017 Congressional Budget Office (CBO) report on the effects of proposed healthcare reform in the US, and the 2018 Doing Business report published by the World Bank. For different reasons, the quality of each study was widely questioned, and each organization was able to partially fend off attacks by appealing to its reputation. This appeal to reputation (rather than scientific methods) is neither sufficient nor durable as a defense.

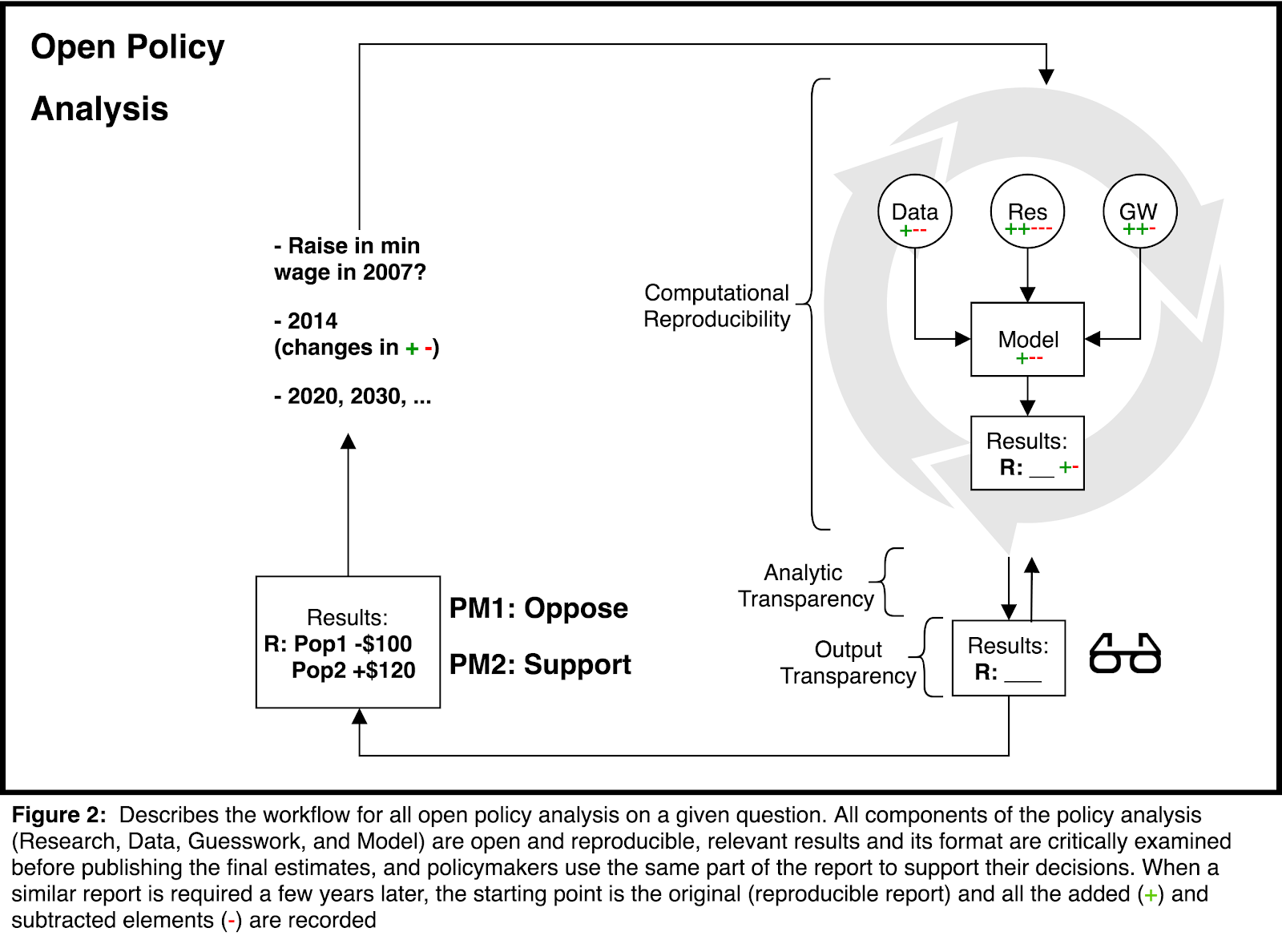

Policy analysis can be opened up in much the same way that we have opened up empirical social science research. We suggest three guiding principles for open policy analysis:

- Computational Reproducibility: Analysts within an organization should be able to obtain the materials (raw data, code, and documentation) used in a report, and reproduce the final output from scratch with minimal effort.

- Analytic Transparency: Experts outside the organization should have access to the same resources for computational reproducibility. Strong emphasis should be placed on readability of the report and background code/spreadsheets.

- Output Transparency: Agencies should first publish their reports without the results for the expert community to assess. After a short period of review, a clear and pre-specified output may be published for policy makers to discuss.
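
To make the first principle concrete, here is a minimal sketch of one way an analyst might check computational reproducibility: rerun the analysis from the raw data and compare a fingerprint of the regenerated results with the fingerprint of the published ones. Everything in it is an assumption made for illustration, including the toy data, the `run_analysis` and `fingerprint` helper names, and the choice of a SHA-256 checksum. It is not taken from our working paper or from any agency's actual workflow.

```python
import hashlib


def fingerprint(rows):
    """Return a SHA-256 digest of a results table, independent of row order."""
    # Serialize each row as comma-separated text, then sort so that the
    # digest does not depend on the order in which rows were produced.
    canonical = "\n".join(sorted(",".join(str(v) for v in row) for row in rows))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def run_analysis(raw_data):
    """Hypothetical analysis step: average cost per group."""
    costs_by_group = {}
    for group, cost in raw_data:
        costs_by_group.setdefault(group, []).append(cost)
    return [(g, round(sum(c) / len(c), 2)) for g, c in costs_by_group.items()]


# Made-up raw data standing in for the materials behind a report.
raw_data = [("A", 10.0), ("A", 14.0), ("B", 7.5)]

# Fingerprint recorded when the report was published.
published = fingerprint(run_analysis(raw_data))

# A second analyst reruns the analysis from scratch (here with the raw
# rows in a different order) and compares fingerprints.
reproduced = fingerprint(run_analysis(list(reversed(raw_data))))
is_reproducible = published == reproduced
```

If the two fingerprints differ, something in the data or the code has changed since publication, and the difference should be explained before the report is relied upon.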

Open Policy Analysis in Practice:

Recently, one of us (Fernando) had the opportunity to present our paper to the Congressional Budget Office, where it was well received. Among the issues discussed was the scenario in which a highly reputable policy agency shares its code and data, opening up the possibility of another agency adapting them for its own purposes. In such cases, to mitigate reputational risk, it’s important that we are able to clearly distinguish between “certified” and “tailored” models. One approach would be to create a badge system like that developed by some scientific journals. But more importantly, we are eager to start a conversation with key stakeholders regarding how best to bring open science into this field.

It is really exciting to see the promise of research transparency gaining traction within the policy analysis world. The stakes could not be higher in terms of the implications for human lives. The emergence of a suite of new transparency tools and approaches in the social sciences suggests that the timing is right for a parallel movement in policy analysis.

This post is co-authored by Fernando Hoces de la Guardia, BITSS postdoctoral scholar, along with Sean Grant (Associate Behavioral and Social Scientist at RAND) and BITSS Faculty Director Ted Miguel. It is cross-posted with the CEGA Blog.

About the authors: Fernando Hoces de la Guardia is a postdoctoral scholar at BITSS. Sean Grant is a behavioral and social scientist at the nonprofit, nonpartisan RAND Corporation. Ted Miguel is the Faculty Director of BITSS and CEGA, and Oxfam Professor of Economics at UC Berkeley.

This matter of transparency and reproducibility of policy analysis is a great complement to the already spreading movement of research transparency and reproducibility. We can actually start the next one on transparency in policy implementation, why not?

Thanks Ted, Fernando and Sean!